AI is not always useful: Key differences, practical applications (automation, data analysis) and limitations (bias, cost, complexity) reveal the reality behind the myths.

Not all AI is bad, but some of it is

In the great circus of artificial intelligence, where new gurus, magic tools and promises of digital revolution appear compulsively every day, it’s time to clear things up a little. This article is neither a hymn to technology nor a nostalgic rejection of progress: it’s an invitation to reflect. To distinguish what works from what merely shines. To understand that not all AI is dangerous, but some of it is. And that intelligence, artificial or not, only makes sense when it serves those who use it wisely.

The Great Collective Delusion: “AI as a Magic Wand”

Artificial intelligence is everywhere. In newspaper headlines, at company meetings, in LinkedIn talks, and even in bars, you can now hear people saying, “We’ll do everything with AI, and AI will take care of the rest.” And that’s the problem. There’s a huge collective delusion hanging over our heads like a cloud of technological smoke: the idea that AI is some magical, intelligent entity, sentient in the human sense, that can solve everything, replace everyone, and, why not, save us time, money, and stress.

Unfortunately, this is not the case. And it will not happen tomorrow either.

The word “intelligence” deceives us

The fundamental error is in the name: “artificial intelligence.” A name that conjures up images of HAL 9000, The Terminator, Her, and every other sci-fi fantasy that has seduced us over the last 50 years. But the word “intelligence” is wrong. Or rather, it is culturally misunderstood.

Because the AI we have today, the kind that writes, guesses, calculates, speaks and responds, is not intelligent in the human sense of the word. It does not understand, it does not think, it does not feel, it has no conscience, it has no intentions. It works with patterns, probability, statistics. It is very good at completing sentences, drawing diagrams and finding solutions to the problems it is given. But it does not think. And it doesn’t because it doesn’t need to: it was not built to.

When we say AI, we’re talking about a tool, like a blender. Only instead of blades and a motor, it uses mathematical models and datasets. It’s software, not an oracle.

But why does it seem so smart?

Because we are the ones projecting intelligence onto it. We need to interpret its behavior as human because it reassures us. When ChatGPT treats us well, we treat it like a colleague. When Midjourney makes our covers, we call it an artist. But it is a cognitive illusion. A bias. A projection. It is like falling in love with a printer because it produces beautiful calligraphy.

It’s not about what AI does, it’s about how we see it. And here, unfortunately, popular culture has done even more damage than the NSA: it has led us to believe that “artificial intelligence” means a robotic mind with a will of its own. But no: it’s just a complex system optimizing functions. Period.

Those who sell AI are often selling smoke

And it is precisely on this misunderstanding that the most toxic marketing of recent years is built: marketing that offers AI as a universal solution. It can be found in CRM systems, office suites, HR software, even toasters. All “enhanced with artificial intelligence.” But in reality, it is often a way of hiding a void. An old feature rebranded under a new name.

Many services that currently call themselves “AI-powered” are really just automation with a little ML thrown in, if you’re lucky. And at worst, they’re basically institutionalized fraud: a pre-configured system that doesn’t learn, doesn’t adapt, doesn’t predict. It just makes noise.

Smoke sells well, especially if it smells like the future.

Courses on “using artificial intelligence”: seriously?

And then there are the courses: “become an expert in artificial intelligence in 48 hours”, “write like a pro with ChatGPT”, “draw with Midjourney even if you can’t draw”. A stream of webinars, master classes, training courses and “complete” guides.

Now: it’s always a good idea to learn, for God’s sake. But if you need a course on “using” AI, you’re probably starting in the wrong place. Because AI, by design, is meant to be intuitive, conversational, and accessible even to non-technical people. If it takes 12 hours of study to get anywhere, you are probably not really using AI. Or maybe you’re not using your brain.

The problem is not learning how to use the tool. The problem is understanding the context of its use. We must teach logic, critical thinking, ethics, digital culture. Not “commands to copy and paste”. AI is used effectively when you know what to ask it, why, and what you expect to get back. Everything else is just social media folklore.

What’s the moral of this story? Let’s stop thinking we’re wizards.

The truth is, there is no magic wand. No AI will free you from the need to think. No algorithm will solve your existential or business problems if you don’t know how to formulate them first. Artificial intelligence is not the answer if you don’t even know what the question is.

Because technology, and AI in particular, is never neutral. It works like a mirror that doesn’t lie: it amplifies what you already have. If you’re disorganized, AI will make you waste time faster. If you’re creative, it will empower you. If you’re confused, it will return your confusion to you with perfect syntax. And if you’re lazy… you’ll procrastinate more effectively.

So before we talk about AI, we must learn to talk about ourselves. About how we think. How we formulate problems, how we make decisions, how willing we are to delegate tasks. The real revolution is not technological: it is internal.

As Carl Jung wrote:

“He who looks outward dreams. He who looks inward awakens.”

Maybe we should start there: looking inside ourselves. Because the goal is not to become an expert in AI. It is to become increasingly aware of how our minds work, of our biases, of our illusions. Only then will artificial intelligence stop being a poorly controlled magic wand… and become what it should be: a tool in the service of awakened intelligence, not a clone for those who wish to abandon their own.

So, before we sign up for yet another course on how to use artificial intelligence, perhaps we should stop for a minute. Breathe. And let’s ask ourselves a slightly scarier, but far more valuable question:

“Do I really need artificial intelligence?

Or should I first understand myself better?”

Types of Artificial Intelligence: Not All Are the Same

When someone says, “I use AI,” your first reaction should be: which one?

Because the statement itself means nothing. It’s like saying, “I use electricity,” or “I work with chemistry”: interesting, but hopelessly vague. The AI universe is so vast and fragmented that using the term in the singular is not only misleading, it’s disrespectful of the complexity behind it.

Artificial Intelligences, Plural: Already a Revolution

Let’s start with this: there are many artificial intelligences. Not only because there are so many companies, models, tools, and software products, but also because there are different types of AI, each with specific goals, logic, and capabilities.

The mother of all differences is between:

- Narrow AI (weak artificial intelligence)

- General AI (strong artificial intelligence)

Narrow AI: Does one thing well, and that’s it

This is the AI we know today, the one found in our smartphones, apps, websites, and cars. It is specialized AI: it does one thing (sometimes two) well, but it can’t do everything. Recognizing faces, suggesting products, writing texts, creating images, translating: all of this is great, but always in a well-defined context.

For example:

- Spotify recommends music to you = Narrow AI

- Google Translate = Narrow AI

- ChatGPT, Gemini, Claude, Copilot = Narrow AI

While they may seem omniscient, they’re not: each of these AI systems is optimized for a specific type of output. ChatGPT is optimized for language generation; Gemini for cross-modal integration; Copilot for coding; Claude for subtle semantic interactions. If you try asking Claude to program like Copilot or Gemini to write in ChatGPT’s tone, you’ll see that something is off.

General AI: (Possibly) A Coming Legend

And then there’s general AI, the real, powerful kind. The kind that doesn’t exist yet. Or rather, that doesn’t exist publicly. It’s an intelligence that can understand the world as we do, adapt to new contexts, create new knowledge, learn on its own, and transfer what it knows in real time. In practice: universal artificial intelligence.

This is what scares us, so to speak, in the movies. This is HAL 9000, this is Samantha from Her, this is the conscious machine, the advanced intelligence. But at the moment, it is more metaphor than reality. None of the current AIs have consciousness, intentionality, or the capacity for general abstraction.

ChatGPT ≠ Gemini ≠ Claude ≠ Copilot: Let’s figure it out

Let’s try to visualize this with a simple and deliberately exaggerated example:

Using ChatGPT to write code is like drilling into a wall with a fork. Something may come of it, but it is not the right tool.

Here’s a simple overview of the most popular AIs today:

- ChatGPT (OpenAI) → A predictive language model that is strong in text, reasoning, summarization, and storytelling. It excels at verbal tasks, and is weak at visual tasks and real-time updates (unless plugins or search are enabled).

- Gemini (Google) → Designed to handle multimodal input (text, images, video, audio) and integrated with the Google ecosystem. Strong at the infrastructure level, but less fluent in conversational writing.

- Claude (Anthropic) → More “ethical” and cautious, understands natural language very well, designed for coherent and safe conversations. Sometimes too “cautious” in its answers.

- Copilot (Microsoft) → Optimized for coding. Ideal for developers in a Microsoft environment, useful for suggestions, autocomplete, and refactoring. Not suitable for creative writing or philosophical musings.

Moral of the story? You can’t say “I use AI” without saying why, with what model, for what purpose.

AI as Home Appliances: A Useful Metaphor

Imagine you walk into your kitchen and say,

“I use electricity.”

Then use the toaster to make coffee, the microwave to knead pizza dough, and the hair dryer to cook eggs.

Doesn’t make sense, does it?

Well, that’s exactly what many people are doing with AI today: using the wrong tools, for the wrong purposes, with expectations that are completely out of line with reality.

The point of the metaphor is that it’s not about the AI itself, but about how you use it and whether the tool fits the purpose.

Dataset, goals, logic: every AI is a child of its context

Talking about “artificial intelligence” as something neutral, objective and universal is one of the biggest and most dangerous lies ever told by the tech narrative.

Every AI is a child of its own context: technical, cultural, ideological, economic. And therefore also of its limits. To truly understand this, we need to analyze three fundamental factors: the dataset, the goals and the design logic.

Dataset: What AI “sees” to learn

Every AI is “trained” on large amounts of data (text, images, audio, code, human behavior) to recognize patterns and generate responses. But which data? Where does it come from? In what language, with what implicit cultural biases?

An AI trained primarily on English-language content will have a distinctly American worldview, with all the biases that come with it:

- algorithms that have difficulty with non-Western names,

- language models that ignore local idiomatic nuances,

- the absence of a socio-political context of “peripheral” realities.

And remember that some datasets are private, others are public, and still others are “stylishly stolen” from the internet. Which raises legal, ethical, and quality questions.

For example, if a model has been trained on Reddit, gaming forums, and informal conversations, you might expect a lighter, perhaps slightly toxic tone. On the other hand, if it has been fed academic essays and newspaper articles, it will be more formal, but perhaps less creative or empathetic.

A dataset is like the world seen through a window. But every window has its own frame.

Goal: Every AI has a goal (and that’s all)

There is no “universal” AI that can do everything well. Each model is designed with a specific purpose in mind: writing text, summarizing data, drawing images, generating code, supporting customer service, etc. This purpose determines not only the technical architecture of the model, but also its “behavioural” choices.

Specific examples:

- Claude, from Anthropic, is designed to be safe, prudent, “moral” and highly aware of bias. As a result, it avoids controversial answers, often self-censors and prefers to express balanced opinions.

- ChatGPT is generally more flexible, more creative, more “human” in communication. However, in some cases it takes more “risks” in what it asserts.

- Gemini (formerly Bard), built on the Google ecosystem, is designed to be informative, concise, and able to integrate with enterprise services. It is the most “enterprise” of the three, the most embedded in the productivity world.

Goals define style, boundaries, priorities.

Using AI without knowing its true purpose is like driving a tractor on the highway: it can move forward, but that is not what it was designed for.

Logic: Each AI has its own “personality” (desired or not)

Behind every AI is a team of engineers, linguists, philosophers, business strategists and lawyers. People who make decisions about:

- how to deal with ambiguity,

- when to say “I don’t know”,

- how to answer sensitive questions,

- whether to show simulated emotions or not.

These decisions create a form of “algorithmic personality.” Not in the sense of awareness, but in the sense of recurring behavior.

- Some AIs come across as empathetic, others as formal.

- Some readily agree with you, others challenge you.

- Some seem enthusiastic, while others are cautious, almost “cold.”

This personality is not a side effect. It is a design choice.

So choosing an AI also means adopting (consciously or unconsciously) a certain way of interpreting the world.

Neutral AI does not exist. And that’s good news.

Let’s repeat this loud and clear:

there is no such thing as neutral AI.

Just as there is no such thing as neutral journalism, neutral art, or completely objective science. Everything that passes through a human mind, algorithmic “thinking” included, is shaped by values, worldviews, priorities, and trade-offs.

But be careful: this is not a flaw. It is a feature.

The awareness of the lack of neutrality forces us to make more conscious choices:

- ask yourself “Whose AI is this?”

- “What was the purpose of its design?”

- “Does this really serve my purpose or is it just selling me something?”

There is no best AI: there is one that is right for you

All this complexity is meant to convey one simple but fundamental point:

there is no such thing as an absolutely best AI.

There is the AI best suited to you, your way of thinking, your project, your context.

A creative agency will do brilliantly with ChatGPT, but may find Gemini too cold.

A tech team will love Copilot, but may hate Claude.

A journalist would find Claude more credible than GPT-4, but less fluent.

Beginners will get on better with an AI that answers “like a human” than with one that answers “like a professor.”

Digital maturity today doesn’t mean falling in love with technology, but understanding when, how, and why to use it. And knowing when to switch tools if one no longer works.

After all, as a saying often attributed to Einstein goes:

“Everything should be as simple as possible, but not simpler.”

The same goes for AI: let’s simplify, but not oversimplify. Understanding the differences, context, and logic behind each AI is key to using them with true intelligence—our intelligence.

To be clear: AI is to be understood, not idolized.

So let’s stop talking about “artificial intelligence” as if it were a single thing, a one-size-fits-all package, or a magic formula. AI is an ecosystem, and like any ecosystem, it’s full of different species with different needs, limitations, and advantages.

If you want to use it correctly, you will have to learn to read the label, know the specifications and test it critically. It is not only about instructions, but also about human experience applied to the right tools.

Because in the end, to paraphrase Marshall McLuhan very freely,

“It is not the tool that creates the message.

It is how you use it that determines the message you send to the world.”

Machine learning explained clearly (and without the nonsense)

We hear about machine learning all the time, but we rarely ask: “What is it? How does it work? And why is it called that?”

We imagine it as something mysterious, somehow magical, like a lab full of hot servers where some dark intelligence is “teaching itself” who knows what.

In reality, it’s simpler. Or rather, it can be simplified without simplifying too much – and that’s our goal.

What is Machine Learning (ML) and Why It’s Not Magic

Machine learning is the heart of modern artificial intelligence. It is what allows a system to “learn” from data, i.e. to identify patterns, rules, correlations… and use them to make predictions, classifications or decisions.

But be careful: this is not intelligence. The algorithm does not understand what you are saying.

It has simply noticed that whenever the word “congratulations” appears in a message, it is very likely to be followed immediately by a smiley face. So it predicts one. The end.

To be clear: machine learning is not a brain, but applied statistics on steroids, driven by huge amounts of data and ever-increasing computing power.
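To make the “congratulations” example concrete, here is a minimal Python sketch of that kind of counting. The messages are invented for illustration and `predict_next` is a hypothetical helper, not how any real product works: it simply tallies which token tends to follow which, then returns the most frequent one.

```python
from collections import Counter, defaultdict

# Toy message history (invented for illustration).
messages = [
    "congratulations :) well deserved",
    "congratulations :) on the new job",
    "congratulations :) to you both",
    "congratulations everyone",
    "thanks :) see you soon",
]

# Count which token follows each token across all messages.
following = defaultdict(Counter)
for message in messages:
    tokens = message.split()
    for current, nxt in zip(tokens, tokens[1:]):
        following[current][nxt] += 1


def predict_next(token):
    """Return the most frequent follower of `token` and its observed frequency."""
    counts = following[token]
    if not counts:
        return None, 0.0
    best, n = counts.most_common(1)[0]
    return best, n / sum(counts.values())


guess, probability = predict_next("congratulations")
print(guess, probability)  # the smiley, seen after 3 of 4 occurrences
```

No understanding is involved: change the messages and the “prediction” changes with them.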

Why is it called Machine Learning?

The name comes from the fact that the machine learns from data without being explicitly programmed for each situation.

A traditional programmer would say:

“If X happens, then do Y.”

Machine learning says:

“I’ve seen X happen a thousand times, and Y usually follows. So when I see something that looks like X, I tend to respond with Y.”

There is no handwritten “if-then”. There is a model that observes, checks, analyzes, tries, makes mistakes, corrects itself. And gradually gets better.
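The contrast can be sketched in a few lines of Python. Everything here is made up for illustration (the link counts, the hand-picked cut-off of 3, the helper `learn_threshold`): the first function is a rule someone typed in, the second derives its rule from past examples.

```python
# Traditional programming: a human writes the rule.
def is_spam_rule(num_links):
    return num_links > 3  # cut-off chosen by hand


# Machine learning: the cut-off is estimated from labeled examples.
# Each pair is (number of links in the email, was it spam?). Invented data.
examples = [(0, False), (1, False), (2, False), (5, True), (7, True), (9, True)]


def learn_threshold(data):
    """Pick the cut-off that misclassifies the fewest examples."""
    candidates = sorted({x for x, _ in data})

    def errors(t):
        return sum((x > t) != label for x, label in data)

    return min(candidates, key=errors)


threshold = learn_threshold(examples)


def is_spam_learned(num_links):
    return num_links > threshold


print(threshold, is_spam_learned(4), is_spam_rule(4))
```

Feed it different examples and the learned cut-off moves; the hand-written one stays where the programmer left it.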

Basic Types of Machine Learning (No Formulas, No Promises)

There are three main categories of machine learning, each with its own logic and some interesting metaphors:

1. Supervised Learning

It’s like teaching a child to recognize animals. You show him a series of pictures and say,

“This is a cat. This is a dog. This is a camel.”

Then you give him a new picture and ask, “What is this?”

The model is trained on labeled data (in other words, the correct answer is already written down) and tries to generalize.

This method is used, for example, to:

- recognize handwriting

- predict house prices

- determine whether an email is spam

It’s like taking a multiple-choice test with the answer key at the back of the book.
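As a rough illustration of the cat, dog and camel lesson, here is a tiny nearest-neighbour classifier in Python. The measurements are invented and `classify` is a hypothetical helper; real systems use far richer features and models, but the principle of generalizing from labeled examples is the same.

```python
import math

# Labeled training data: (weight in kg, height in cm) -> animal. Values are made up.
training = [
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((20.0, 55.0), "dog"),
    ((30.0, 60.0), "dog"),
    ((500.0, 190.0), "camel"),
    ((450.0, 185.0), "camel"),
]


def classify(features):
    """1-nearest-neighbour: return the label of the closest training example."""
    _, label = min(training, key=lambda item: math.dist(item[0], features))
    return label


# A new, unlabeled "picture": the model answers with the closest thing it has seen.
print(classify((4.5, 24.0)))
print(classify((25.0, 58.0)))
print(classify((480.0, 188.0)))
```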

2. Unsupervised Learning

There are no labels here. You just give the model a pile of data and say,

“Find some interesting groups.”

It’s like inviting someone to a party where they don’t know anyone and saying,

“Divide the people into groups as you see fit.”

The model looks for hidden patterns, similarities, and relationships. It doesn’t know what it is looking for, but it looks anyway. It is used for:

- analyzing customer behavior

- finding new market segments

- reducing the dimensionality of data

It’s like looking at a deck of cards without knowing the rules of the game… but still managing to sort them by suit.
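A small sketch of the “divide them into groups as you see fit” idea, using plain k-means on one-dimensional data. The spending figures and the starting centers are assumptions chosen for illustration, and `kmeans_1d` is a hypothetical helper written for this example.

```python
def kmeans_1d(values, centers, iterations=10):
    """Plain k-means on 1-D data, starting from the given centers."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            # Assign each value to the nearest center.
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters


# Monthly spend of six customers (invented). No labels, no "right answer".
spend = [12, 15, 14, 90, 95, 100]
centers, clusters = kmeans_1d(spend, centers=[0.0, 50.0])
print(clusters)
print(centers)
```

Nobody told the algorithm that there are “small spenders” and “big spenders”: the two groups emerge from the distances alone, and naming them is still up to a human.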

3. Reinforcement Learning

Here the model is like a rat in a maze: it does something, gets rewarded (or punished), and over time learns how best to behave. It is used for:

- teaching an AI to play chess or video games

- driving a self-driving car

- optimizing robots in real-world conditions

It’s like a video game: you make mistakes, you die, you start over, you learn.
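The rat in the maze can be sketched with tabular Q-learning on a five-cell corridor. This is a toy under stated assumptions: the corridor, the reward of 1 at the right end, the learning rate, the discount and the purely random exploration are all arbitrary choices for illustration, not a recipe used by any specific system.

```python
import random

N_STATES, GOAL = 5, 4      # corridor cells 0..4, reward at the right end
LEFT, RIGHT = 0, 1
ALPHA, GAMMA = 0.5, 0.9    # learning rate and discount (arbitrary choices)


def step(state, action):
    """Move one cell; the walls keep the agent inside the corridor."""
    nxt = max(0, state - 1) if action == LEFT else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            action = rng.choice((LEFT, RIGHT))  # explore at random
            nxt, reward = step(state, action)
            # Q-learning update: nudge the estimate toward reward + best future value.
            target = reward + GAMMA * max(q[nxt])
            q[state][action] += ALPHA * (target - q[state][action])
            state = nxt
    return q


q_table = train()
# Greedy policy read off the learned table, for every non-goal cell.
policy = ["right" if q[RIGHT] > q[LEFT] else "left" for q in q_table[:GOAL]]
print(policy)
```

The agent is never told “go right”. It wanders, occasionally stumbles on the reward, and the value of that reward gradually propagates back along the corridor.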

Data, models, learning and forecasting: let’s take a closer look

We often hear words like “model”, “dataset”, “training”, “inference”… and it’s very confusing. Let’s explain it with a simple metaphor: the gym.

The dataset is the food:

This is raw data, the initial information. The more diverse, balanced and high-quality, the better.

An undernourished AI, like an athlete who eats only chips, will show poor results.

The model is the body that needs to be trained:

Neural networks, mathematical functions, algorithms. Weak and rough at first. It needs to be trained.

Training is the gym session:

Repeat the exercise (e.g. analyze the data), correct errors, optimize parameters.

It’s a continuous cycle: input → output → feedback → adjustment.

The prediction is the performance:

Once trained, the model can respond to new inputs. It doesn’t always succeed, but if it’s trained well, it will do its job well.
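The four pieces fit together in a few lines. The sketch below is an assumption-laden toy: the dataset follows y = 2x + 1 by construction, the “model” is just two numbers, and training is plain gradient descent with a learning rate picked by hand.

```python
# Dataset: (input, expected output) pairs. Invented, following y = 2x + 1.
dataset = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

# Model: two parameters, untrained ("weak, rough at first").
w, b = 0.0, 0.0

# Training: input -> output -> feedback -> adjustment, repeated many times.
learning_rate = 0.05
for _ in range(2000):
    grad_w = grad_b = 0.0
    for x, y in dataset:
        error = (w * x + b) - y                    # feedback: how wrong was the output?
        grad_w += 2 * error * x / len(dataset)
        grad_b += 2 * error / len(dataset)
    w -= learning_rate * grad_w                    # adjustment
    b -= learning_rate * grad_b

# Inference: the trained model answers for an input it has never seen.
prediction = w * 10.0 + b
print(round(w, 3), round(b, 3), round(prediction, 3))
```

Feed it a poor dataset (too few points, or noisy ones) and the same loop will happily learn the wrong line: the chips-only diet from the metaphor.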

How to Spot Cats (and Spammers)

To conclude, with a little irony and clarity:

- Machine learning is how an AI can figure out there’s a cat in a photo, even if it’s a ginger cat sleeping on a printer.

- It is what allows Gmail to send the Nigerian prince’s email straight to spam.

- It is why Netflix knows you love Scandinavian thrillers, even if you don’t know it yet.

Spoiler: it’s science, not magic, but it works pretty well.

Machine learning doesn’t think. But it observes, analyzes, learns, and predicts. Not because it understands, but because it has been well trained. It makes predictions based on a wealth of experience, like an old barista who knows that when you come in at 8am, you want coffee, not a Negroni. Understanding how it works is useful not only from a technical perspective. It helps you avoid pitfalls, know what to expect, and, above all, use it for what it is:

A great helper if you know what to ask for.

A total disaster if you expect it to think for you.

Fake Experts and Hype Bubbles

We live in strange times. We are surrounded by “AI experts” who can’t tell the difference between a supervised algorithm and a TikTok script. People who yesterday were selling courses on how to “become a digital nomad” and today have miraculously become “AI coaches,” “AI strategists,” or “exponential technology visionaries.”

If that made you smile, you’re in good company. But it’s not just funny. It’s a cultural distortion that does serious harm: creating false hopes, unnecessary fears, and, most importantly, a giant cognitive bubble of nonsense, buzzwords, and ignorance disguised as the future.

Everyone is an “expert” in something they don’t understand

Let’s start with a paradox:

AI is a tool meant to simplify, but it becomes very complicated because of who talks about it.

Some will offer you a foolproof method to master ChatGPT, some will offer you a foolproof guide to SEO, some will teach you how to build a six-figure business using AI (even if you know nothing).

These are the same people who were selling NFTs six months ago, cryptocurrencies before that, and random motivational courses before that.

The truth is, there is nothing to “master.” Artificial intelligence is designed to be accessible. If you have to spend weeks learning something to use it, it’s not AI, it’s poorly designed software. Or, to put it simply, you’re trying to make it into something it’s not: a substitute for intelligence.

Here’s the thing: you don’t have to be an expert in AI to use it effectively. You do have to be an expert in what you want to do with AI.

Expert myths: let’s take them apart piece by piece

In the world of AI, the expert myth is fueled by three false beliefs:

- “To use a tool properly, you need to know how it works.”

No. You need to know what you need. Just as you don’t need to know how a diesel engine works to drive a car, you don’t need to know how to write a neural network to use ChatGPT. You need context, not code.

- “Only people who can code can truly understand this.”

False. Many of the people who use AI best are not techies. They are philosophers, writers, designers. Because AI is language, vision, communication. You need to know what to say, not how the algorithm is written.

- “AI will replace everything and everyone, so you need to take cover.”

This is fear turned into marketing. Artificial intelligence will not replace those who can think, only those who have never found the time to do so. If your work is based on mechanical and repetitive processes, then yes, AI will be your competitor. But if your value lies in critical thinking, vision, creativity… AI will be your best ally.

Courses, Boot Camps, Promises: An Exhibition of Illusions

Artificial intelligence has never been such a compelling selling point.

Online courses with slick landing pages promising “your future with AI.” Masterclasses starting at €997 “today only.” Trainers telling you how to “maximize your ROI with GPT-4”… without ever having worked in a company.

This is the new digital mirage industry, and it’s not just folklore: it’s dangerous.

Because it does two terrible things:

- It makes people believe that success can be automated, when in reality it is always the result of training, strategy and effort.

- It turns AI into a fetish to be idolized, losing sight of its true value: being a tool in the service of human intelligence, not a replacement for it.

AI is a mirror, not a mentor

And here’s the problem: people are looking for guidance, meaning, or shortcuts. But AI is not a mentor. It is a mirror. It gives you back yourself, amplified. If you’re confused, it will hand your confusion back to you, neatly articulated. If you’re clear, it will give you clarity expressed in great style. We need awareness, not certificates. We need a culture of thinking, not some freak show offering quick fixes to engineering problems.

What courses are really needed today?

- How to think better.

- How to clearly formulate the problem.

- How to ask questions that open up possibilities rather than close off answers.

- How to distinguish elegant solutions from unnecessary complications.

What if everything changes tomorrow?

And here comes the unspoken problem: AI changes every day. Models are updated, hardware is expanded, interfaces evolve. What “works” today may be outdated tomorrow. “Magic tips” last as long as viral posts: 24 hours, then they disappear. So what’s the point of training if everything will be different tomorrow? The only real preparation possible is a flexible mindset, a culture of doubt, the ability to navigate a new environment.

True competence is human competence, not technical competence.

In a world where everything can be automated, the only thing that matters is what can’t be:

- Intuition

- Ethics

- Critical judgment

- Vision

- Relationships

- Beauty

- Empathy

You don’t have to be an expert in artificial intelligence. In the age of machines, you have to be more human. And that’s much harder, by the way.

So: if someone sells AI as a magic formula, they are just selling smoke.

Be wary of anyone who promises you the “secret key to mastering AI.” Be wary of anyone who sells you the illusion of superiority just because they learned three English prompts to paste into ChatGPT.

Be especially wary of anyone who poses as an expert, because those who really are don’t need to say so. AI doesn’t need gurus. It needs people who still want to learn. To question themselves. To collaborate with powerful tools without being dominated by them. The future will belong not to technocrats, but to the curious. And, as always, to those who can distinguish substance from noise.

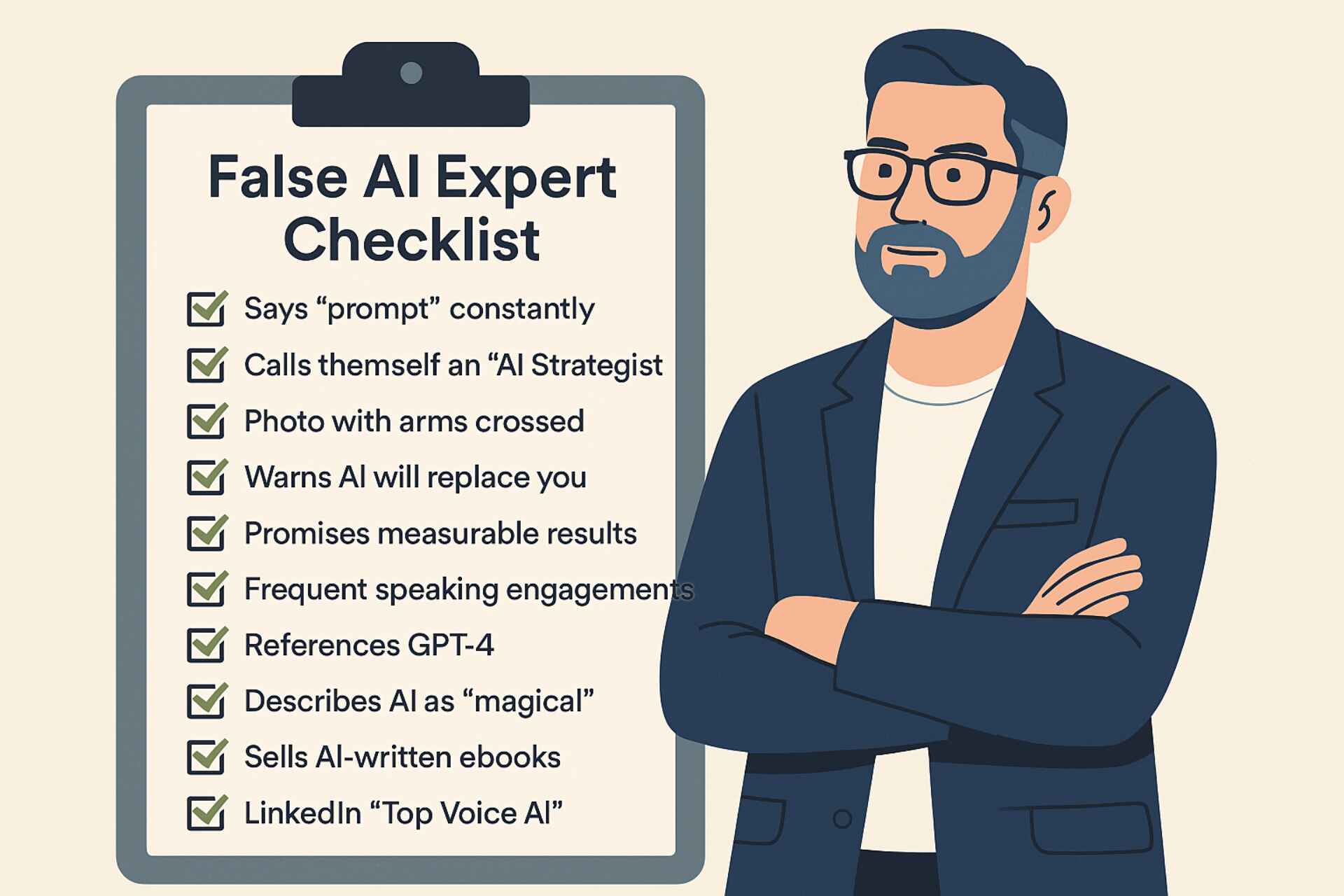

The Satirical ‘Fake AI Expert’ Checklist

(If you recognized at least 4 of these points… run!)

- He says “prompt” every three words, even when ordering at a bar: “Cappuccino, with foam, prompt: like in Italy, but soft.”

- He calls himself an “AI strategist,” “evangelist,” or “prompt engineer,” but his background is in motivational coaching, pyramid selling, or “sales funnel expert since 2017.”

- He has at least one profile photo, often black and white, with thick glasses and arms crossed over his chest, gazing at the sky like a discount Elon Musk.

- He tells you that “AI will replace you if you don’t learn how to use it,” but then sells you courses to learn how to use it… as if intelligence could be bought in installments.

- He promises measurable results, such as “this prompt will increase your conversions by 236%,” as if the AI were a precisely calibrated slot machine.

- He speaks at a different event every week, but no one really knows what he does between keynotes.

- He refers to GPT-4 without knowing what the model is, and confuses Gemini with cryptocurrency (“Yes, I invest too!”).

- He talks about AI like it’s a magic wand, but when you ask him to explain the difference between supervised learning and reinforcement learning… he changes the subject.

- He creates and sells “Your first AI-powered eBook in 48 hours,” but writes his LinkedIn posts with his feet and uses emojis as punctuation.

- He introduces himself as a “Top Voice in AI” on LinkedIn, but in reality he only follows other “top voices” who comment on each other.

Recognize anyone? Don’t worry: AI will replace them. Not because of its skills, but because of their lack of substance.

When using AI makes sense, and when it doesn’t

When to use: Recognizing a real need

Using AI only makes sense when there is a specific problem to solve, a repetitive task to automate, or a human limitation to overcome with technology. AI is not a buzzword, but a surgical instrument: it works if used correctly, for the right reasons, and at the right time.

It is best used when:

- you need to analyze large amounts of data in a short time;

- you want to create original content supplemented with human intervention;

- you are looking for a virtual assistant for organizing, looking things up, and pulling information together;

- you have mechanical tasks that take up time but do not require genuine creativity.

There is no point in using it if:

- You want to avoid thinking;

- You expect it to do all the work for you;

- You think of it as a way to do something faster without knowing what you’re doing.

AI is not an excuse for incompetence, but an exoskeleton for existing expertise. If your job consists of tasks that can be automated, you risk losing it. But if your job involves critical thinking, relationships, vision… then AI can be your best ally. Artificial intelligence makes sense when it is integrated, not when it is a replacement. Its value lies in helping you focus on what really matters: ideas, choices, meaning. AI is useful when it unlocks the potential of natural intelligence, not when it replaces it.

What to Use It For (and What Not to Use It For): A Clarity Guide

One of the worst habits spreading today is using AI for everything, indiscriminately. We were told it would take care of everything. But the truth is that not everything suits everyone. There are areas where AI works very well, and others where it works very badly, causing invisible but deep damage.

Where it works well:

- Publishing and Content

Great for drafts, ideas, summaries, headlines, editorial plans. It does not replace the writer, but stimulates them.

- Programming

Code suggestions, debugging, refactoring, automatic documentation: a revolution for developers.

- Medicine and research

Assisted diagnostics, genetic analysis, predicting clinical outcomes, discovering molecules: this is where AI plays an important role. But the doctor always stays in the loop.

- Functional design and creativity

Creating layouts, color schemes, conceptual variations: stimulating intuition, not replacing it.

- Business Intelligence

Automated reporting, dashboards, predictive analytics: strategic tools for better decision making.

Where it leads to disaster (or near disaster):

- Automated Customer Service

No one likes to interact with a chatbot that lacks empathy. Better a good person than an annoying AI.

- Forced Artistic Creation

To create a work of art, it is not enough to simply create beautiful images. Without any meaning, it is simply generative decoration.

- School and Learning

Asking ChatGPT to write an essay defeats the purpose: students don’t learn, teachers have nothing real to grade, and all the educational value of failure is lost.

AI can support ethical or sensitive decision making, but it should never make decisions for us in areas such as justice, psychology, hiring, or moral judgment.

So how do we avoid this? Use AI to speed up processes, not to delegate them. If you make a decision, you are still responsible, even if an algorithm “suggests” it.

AI as a creative tool, not a replacement

There is a recurring and dangerous illusion: the belief that artificial intelligence can generate creativity for us. It can’t. AI can imitate, combine, mix, and even surprise. But it cannot feel, taste, or want. It does not create meaning. And creativity without meaning is just beautifully packaged noise.

Using AI in the creative field makes sense if you think of it as an accelerator of understanding. To explore more options faster. To visualize ideas. To compare alternatives. But it is always up to the human to say, “This one, yes. This one, no. This one looks like me.” Intuition is still ours. The choice is ours. The courage to break boundaries remains a human trait. An illustration produced by following a command may be aesthetically pleasing. But it is not art unless there is authorship behind it. A text written by an LLM may be well written, but it says nothing unless it carries a vision.

Creativity is a connection between experience, pain, joy, personal history and cultural context. It is the result of human activity that is experienced, processed and then expressed. AI can help. But it has nothing to say except what we have taught it. And this, paradoxically, is its greatest limitation, and our strength.

AI that frees up time for thinking, not AI that eliminates the need to think

One of the most underrated benefits of AI is this: it buys you time. It eliminates mechanical, repetitive, and predictable tasks. It lets you focus on what really matters. But be careful: it shouldn’t take away your need to think. Today, we too often use AI to avoid the effort of reasoning. We ask ChatGPT to write for us, to decide for us, to plan for us. In doing so, we silence our inner voice, the part of us that needs exercise to stay alive. Critical thinking is like a muscle: if you don’t use it, it atrophies. AI should give you time to train it, not be an excuse to ignore it. Using it correctly means:

- automating what does not require depth;

- taking time for the work that requires understanding, vision, judgment.

This is a paradigm shift. It’s no longer about “doing more in less time.” It’s about thinking better, with more time and clarity. Imagine a day when AI does your research, briefings, and boring emails for you. And you can focus on ideas. On choices. On relationships. This is the real superpower: not writing faster, but thinking deeper.

When AI Becomes a Danger: The Dark Side of Automation

Finally, one thing needs to be made clear. Artificial intelligence is not inherently bad. But like any powerful tool, it can become a serious problem if used incorrectly, too often, or in the wrong context. There are three main risks:

- Dehumanization of the process: replacing people with cold chatbots, simulated intelligence that can’t listen.

(See: annoying customer service, voice assistants that make you scream.)

- Cultural flattening: using AI to create mass-market content without vision, tone, or soul.

(See: cloned articles, generic videos, soulless presentations.)

- Cognitive addiction: the habit of no longer trying to think, analyze, choose.

(See: students copying assignments from ChatGPT, managers making decisions based solely on AI analysis.)

If we do not put ethics and awareness at the center, AI will become a distorting mirror: it will show only what is already visible, it will only enhance what already exists, excluding errors, but also discoveries. In other words: it can optimize what already exists, but not change it. And without transformation, there is no innovation. There is only simulation.

Cultural issues before technological issues

1. We need more philosophy of information than Python code.

At this point in history, technology is advancing far faster than our ability to understand it. While AI models grow, improve, and evolve, our digital culture remains fragile, impressionistic, and unprepared. And this creates a paradox: we are surrounded by incredibly powerful tools, but we don’t yet have the vocabulary to truly control them. It’s not enough to just know how an algorithm works. You need to know what it means to use it, what consequences it has, what values it conveys, even unintentionally.

The central figure here is Luciano Floridi. His “philosophy of information” teaches us that information is not raw data, but a relational event that changes the one who receives it. It is a process. It is an impact. It is a game. And AI, as a producer and mediator of information, is also a producer and mediator of moral consequences.

The problem is no longer technical, but axiological. It is not about writing code that works, but about writing responsible code. Code that does no harm. Code that includes human thought. The biggest mistake we make is believing that AI is a technology to be learned, when in fact it is a medium to be understood. We need fewer tutorials and more epistemology. Fewer commands, more questions.

2. Society is not ready to digest the complexity of AI.

We are used to technology being simple, direct, and efficient. Switches that turn things on and off, apps that fix things, services that respond. AI breaks this logic: it is ambiguous, changeable, and unpredictable. And that is disorienting. The societies we live in — from schools to media, from business to politics — have not yet come to terms with what it means to live with systems that can generate text, images, decisions, patterns automatically and without direct human control.

The complexity of artificial intelligence is not just an engineering matter. It is also conceptual. It requires a new literacy, an education in complexity. As Yuval Noah Harari says, AI risks becoming the most powerful manipulation technology ever invented precisely because it knows how to adapt to human cognitive vulnerabilities. It does not impose content on us: it seduces. And that is far more dangerous.

We need a culture that can tolerate shadow, contradiction, nonlinearity. We need a society that is less impatient and wiser. Less thirsty for solutions and more concerned with consequences.

3. Bias, Ethics, Transparency: AI is not neutral

One of the biggest misconceptions today is that artificial intelligence, because it is “logical,” is also neutral. But an algorithm is never neutral. It is created by humans using data generated by humans, in a specific historical and cultural context.

As Norbert Wiener, the father of cybernetics, taught us, any system that automates human processes also absorbs their ambiguities, prejudices, and moral limitations. AI is not a lie detector. It is a filter, and each filter chooses what to let through.

Bias is everywhere:

- in the dataset (racial, linguistic, gender imbalance),

- in the model (which reinforces stereotypes rather than overcoming them),

- in the results (which appear objective, but in fact are not).

So: who controls the controller? Who is responsible if the AI rejects resumes for reasons no one can inspect? Who decides whether a prediction is correct or simply socially acceptable?

Transparency is not optional: it is a form of preventive justice. Whenever we delegate something to an algorithm, we must ask ourselves:

“By what right? In whose name? Under whose supervision?”

4. Authority and delegation: the real revolution is a social revolution.

Whoever controls the algorithm today holds a new form of power. No longer just economic or political, but cognitive. It determines what we see, what we read, what we buy, what we consider true. And all of this happens in an opaque, invisible way, as if it were “neutral.”

The delegation we give to AI is never neutral. It is an act of trust, and therefore of dependency. Every time we delegate decision-making to AI, we give up some of our free judgment.

The question is not “what can AI do?” but “what do we stop doing when we give it permission to act?”

As Byung-Chul Han says, a transparent society is also a surveillance society. If we really want to coexist with AI in a healthy way, we need to rethink the social contract between technology and humanity. We can’t continue to think of AI as a simple tool. We need to treat it as a counterpart, a new social entity. And we must learn to live with it with clarity, rules, and shared responsibility.

5. AI is not intelligent. We should be. But often we are not.

Here we come back to square one, and this is perhaps the most inconvenient truth. AI is not really “smart.” It does not understand, it does not feel, it does not judge. It does what it was trained to do: statistically, coldly, based on data. The problem is that we are becoming less and less intelligent ourselves. We have confused intelligence with speed. Information with knowledge. Content with meaning. And we are gradually abandoning complexity, slowness, and responsibility for mistakes. Unless we improve our ethical, cultural, and critical intelligence, AI will become an amplifier of our emptiness. It does not guide us: it imitates us. It does not show us the way: it retraces our most popular routes.

So the question is not:

“How smart will AI be?”

But:

“How much more could we do if we knew it was there?”

I conclude the argument with the following statement:

Artificial intelligence is not a solution.

It is not a savior. It is not even an enemy.

It is a lens.

And like any lens, it does not create anything on its own.

It only magnifies what already exists.

If you’re confused, AI will make you more confused.

If you’re smart, AI will make you faster.

If you’re an idiot, AI will make you a much more efficient idiot.

But if you’re a conscious, creative, strategic, thinking being, then yes: AI can be a tremendous lever.

But only if you do your homework.

Only if you ask yourself who you are, what you want, what your job really is.

Only if you learn to ask the right questions, to question the obvious answers, to recognize the difference between what is useful and what is merely novel.

AI is not a substitute for intelligence.

It amplifies it, exaggerates it, renews it.

And if that intelligence is sick, lazy and superficial… it will do nothing but spread its symptoms throughout society.

That’s why the real question is not “How do I use AI?”

But:

“Who uses it? Why? Based on what worldview?”

And especially:

“What do I want to keep human, even when everything becomes artificial?”

That’s the crux of the matter.

Don’t learn how to use AI.

Learn how to stay smart.

Final Abstract

In an era when artificial intelligence is presented as a universal solution to every problem, this essay challenges us to radically change our perspective: it’s not AI that needs to be understood, but ourselves.

We explore different types of AI, expose fake experts, and analyze ethics, power, and delegation until we rediscover an uncomfortable and liberating truth:

AI is not intelligent. We are the ones who have to be.

AI is a powerful, useful, and transformative tool, but only if it is used with critical thinking, vision, and responsibility.

It does not solve our limitations: it only amplifies them. It does not make our lives easier: it forces us to rethink them.

The future belongs not to those who know how to best use AI, but to those who know how to best think with AI around them, without idolizing or deifying it.